Graphics Cards

Sources:

Titan:

https://images10.newegg.com/NeweggImage/ProductImage/14-121-923-03.jpg

AMD:

https://encrypted-tbn0.gstatic.com/images?q=tbn:ANd9GcROsfTIAKbU5dDDXgLWkhHBG6Hg6VJ3I2KG272L7xde4YdA8QHZ

What does it do?

A graphics card, or graphics processing unit (GPU), is very similar to a processor (CPU) in that both are used to solve arithmetic and logical problems. Where the two components differ is in the types of problems they are designed to solve. In terms of hardware, processors usually have between one and eight cores, while GPUs can have hundreds of microprocessors. This allows GPUs to carry out many more tasks at once, but only if those tasks are simple. This is where the graphics part of GPU comes in. Typically, rendering a single polygon on a computer is a trivial task in terms of computing power. One microprocessor can handle this task with ease, but what if the computer is told to draw a complex shape, one that consists of many polygons?

GPU vs CPU

Let's define a shape with an arbitrary number of vertices, edges, and faces. When a computer receives the order to draw the shape, it tries to partition the shape so that it can be drawn using triangles in three-dimensional space. Let's say the computer determines that two hundred triangles must be drawn to render the shape. This information is relayed to the GPU, which passes the coordinates of each vertex, the vertices that are joined by an edge, and the representation of each triangular face on to its microprocessors. If the GPU has one hundred microprocessors, the work is divided evenly among them, meaning each microprocessor is in charge of rendering two simple triangles. Since the microprocessors all work simultaneously, the shape is rendered in two iterations. Had the same job been assigned to a processor with four cores, it would have taken fifty iterations for the shape to be rendered.
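
The iteration counts above can be checked with a short sketch. It assumes the simplified model used in the example, where every unit renders exactly one triangle per iteration; the function name `iterations_needed` is made up for illustration and is not part of any graphics library.

```python
import math

def iterations_needed(num_triangles: int, num_units: int) -> int:
    """Rounds needed if every unit renders one triangle per round.

    Simplified model from the text: all units work at the same time,
    so the work takes ceil(triangles / units) rounds.
    """
    return math.ceil(num_triangles / num_units)

gpu_rounds = iterations_needed(200, 100)  # GPU with 100 microprocessors
cpu_rounds = iterations_needed(200, 4)    # CPU with 4 cores

print(gpu_rounds, cpu_rounds)  # prints: 2 50
```

Rounding up matters when the work does not divide evenly: 201 triangles on the same GPU would need a third iteration.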

So if a GPU can handle more tasks at once, then what is the point of even having a CPU?

A GPU is only more efficient when it comes to executing a large number of simple instructions. On average, a single microprocessor on a GPU is much slower than a core on a multi-core processor. This means that if an extremely difficult problem is passed to one microprocessor on a GPU, that microprocessor will take far longer to solve it than the faster core on a CPU would. In general, a CPU can be thought of as the device that handles complex, lengthy instructions, while a GPU handles abundant, simple problems.
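
The trade-off can be illustrated with a toy timing model. The relative speeds below are hypothetical numbers picked only for illustration (a GPU microprocessor is assumed to be ten times slower per task than a CPU core); they are not measurements of any real hardware, and `total_time` is a made-up helper.

```python
import math

# Hypothetical values, for illustration only.
CPU_CORES, CPU_TIME_PER_TASK = 4, 1.0      # few fast cores
GPU_UNITS, GPU_TIME_PER_TASK = 100, 10.0   # many slow microprocessors

def total_time(num_tasks: int, num_units: int, time_per_task: float) -> float:
    """Time to finish num_tasks when num_units work in parallel rounds."""
    rounds = math.ceil(num_tasks / num_units)
    return rounds * time_per_task

# Many simple tasks: the GPU wins because it needs far fewer rounds.
cpu_many = total_time(200, CPU_CORES, CPU_TIME_PER_TASK)
gpu_many = total_time(200, GPU_UNITS, GPU_TIME_PER_TASK)

# One task that cannot be split: the faster CPU core wins.
cpu_one = total_time(1, CPU_CORES, CPU_TIME_PER_TASK)
gpu_one = total_time(1, GPU_UNITS, GPU_TIME_PER_TASK)

print(cpu_many, gpu_many)  # prints: 50.0 20.0
print(cpu_one, gpu_one)    # prints: 1.0 10.0
```

Under these assumed speeds the GPU finishes the two hundred small tasks sooner, while the single indivisible task is finished sooner by the CPU.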

| What a CPU solves | What a GPU solves |
| --- | --- |
| Derive the general solution of the Laplace transform of t^5 * arccosh(at + qt^b) * sin(ct) * e^(dt) using a series expansion blindfolded, in a loud room, with a jumbo-sized Sharpie, on a 3x5 note card. | [image: multiplication table] |

More Processes = More Power

While GPUs can be considerably faster than CPUs for some tasks, they almost always take more power to function at an effective speed. A high-end GPU today like the Titan X draws upwards of 250 W, while a high-end Intel i7 processor only takes 84 W to function. In some cases, people opt to put two GPUs in a single computer and bridge them together with a Scalable Link Interface (SLI). With two cards, the computer can share the processing load across both GPUs, making the computer faster but a lot more power hungry. In particular, as discussed in the web page about processors, heat becomes a major issue in this case because GPUs cannot process data as efficiently when they are overheated.

GTX Titan GPUs in Four-Way SLI

Calculating Cost

Let's say a computer running a Titan X is left on for five hours each day for a year. How much does it cost to run the GPU per year?

Price per kWh in Fairbanks = $0.2422/kWh

5 hours a day for 365 days = 5 * 365 = 1825 hours

So the price of running a Titan X five hours a day for a year = $0.2422/kWh * 0.250 kW * 1825 h

= $110.50 per year

This is expensive machinery to maintain!

In the four-way setup above, assuming each Titan X adds 250 W to the total power, it would cost roughly:

$0.2422/kWh * 1 kW * 1825 h = $442 per year
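
Both cost estimates follow the same formula: price per kWh times power in kW times hours of use. A minimal sketch, assuming the figures given above (250 W per Titan X, five hours a day, $0.2422/kWh) and a hypothetical helper named `annual_cost`:

```python
def annual_cost(power_kw: float, hours_per_day: float,
                price_per_kwh: float, days: int = 365) -> float:
    """Yearly electricity cost in dollars: price * power * hours used."""
    return price_per_kwh * power_kw * hours_per_day * days

PRICE_FAIRBANKS = 0.2422  # dollars per kWh, from the text
TITAN_X_KW = 0.250        # 250 W per card, from the text

one_card = annual_cost(TITAN_X_KW, 5, PRICE_FAIRBANKS)
four_cards = annual_cost(4 * TITAN_X_KW, 5, PRICE_FAIRBANKS)

print(f"One Titan X:   ${one_card:.1f} per year")    # about $110.5
print(f"Four Titan Xs: ${four_cards:.1f} per year")  # about $442.0
```

Changing the hours per day or the local electricity price shows how quickly the running cost scales with usage.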

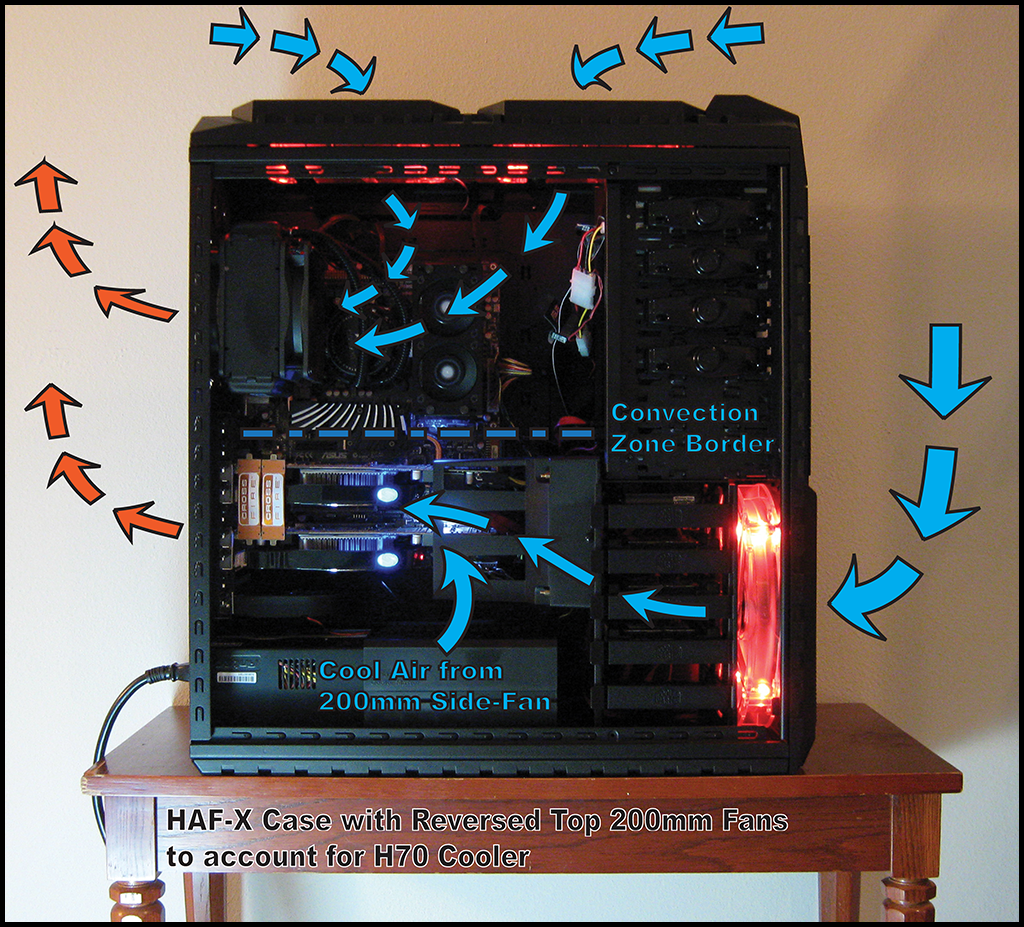

Heat Expulsion

When dealing with a setup such as the one above, fans and convection currents are essential to designing a computer that can remove heat from multiple GPUs effectively. Below is a diagram of a HAF-X case, which takes in cool air from the outside via fans located on the top, front, and bottom of the case. This cool air is directed towards the two GPUs in the bottom left-hand corner of the computer case. There it mixes with the hot air blown off by the fans on the GPUs, so heat is transferred from the hot GPUs to the cool air via convection. As a result, the GPUs settle at a lower temperature and therefore run more efficiently. It should be noted that in many setups it is typical to have as many cold air intakes as possible and only one direction for the hotter outtake air. This setup features a convection zone border, which is a fancy name for splitting the cooling process across two sets of intake and outtake fans. This helps ensure that the cool air coming into the computer has minimal heat exchange with the hot air blown out of the back.

Picture Sources:

multiplication table:

http://www.math-aids.com/images/multiplication-drills.png

4 way SLI GPUs:

https://content.hwigroup.net/images/products_xl/293029/3/nvidia-geforce-gtx-titan-x-sli-4-way.jpg

HAF-X:

https://www.dataimage.com/jpeg;base64,/9j/4AAQSkZJRgABAQAAAQABAAD/2wCEAAkGBwgHBgkIBw